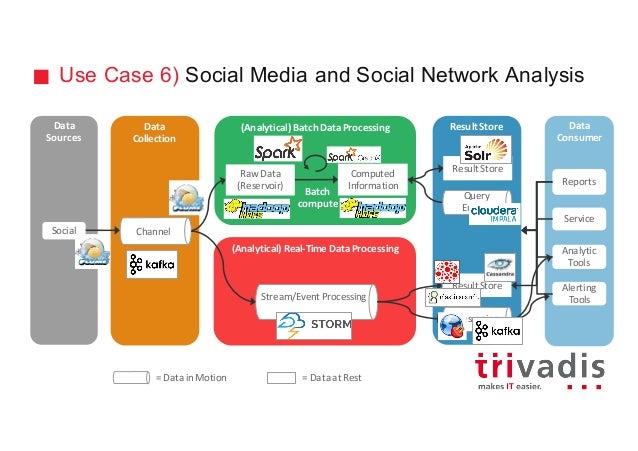

Big data architecture tools.

Apache Storm is an open-source, distributed, real-time, fault-tolerant processing system for big data. It efficiently processes unbounded streams of data. An unbounded stream is data that keeps growing: it has a beginning but no defined end.
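To make "unbounded stream" concrete, here is a minimal sketch in plain Python. It is not Storm's own API; it only borrows Storm's vocabulary, in which a spout emits tuples and bolts transform them. The names `sentence_spout`, `split_bolt`, and `count_bolt` and the sample sentences are hypothetical. Because the source never ends, the consumer can only ever take a bounded slice of it.

```python
import itertools
from collections import Counter
from typing import Iterator


def sentence_spout() -> Iterator[str]:
    """Hypothetical source that emits sentences forever (an unbounded stream)."""
    samples = ["the cow jumped over the moon", "an apple a day", "the moon is bright"]
    for sentence in itertools.cycle(samples):
        yield sentence


def split_bolt(sentences: Iterator[str]) -> Iterator[str]:
    """Split each sentence into words as soon as it arrives."""
    for sentence in sentences:
        for word in sentence.split():
            yield word


def count_bolt(words: Iterator[str]) -> Iterator[tuple[str, int]]:
    """Keep a running count per word and emit the updated count for each word."""
    counts: Counter[str] = Counter()
    for word in words:
        counts[word] += 1
        yield word, counts[word]


if __name__ == "__main__":
    topology = count_bolt(split_bolt(sentence_spout()))
    # The stream has no end, so only the first 10 results are consumed here.
    for word, count in itertools.islice(topology, 10):
        print(word, count)
```

Results are produced incrementally, one word at a time, rather than after the input is complete. A real Storm topology would run continuously across a cluster and add the fault tolerance that this single-process sketch does not attempt.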

Neo4j is a graph database widely used in the big data industry. It follows the fundamental structure of a graph database, in which data is stored as interconnected nodes and relationships. Properties on those nodes and relationships are stored as key-value pairs.
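The node-relationship model with key-value properties can be illustrated with a small in-memory property graph. This is a sketch in plain Python, not Neo4j's API or storage engine; the classes `Node`, `Relationship`, and `PropertyGraph` and the sample data are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: int
    label: str
    properties: dict[str, object] = field(default_factory=dict)  # key-value pairs


@dataclass
class Relationship:
    start: int
    end: int
    rel_type: str
    properties: dict[str, object] = field(default_factory=dict)  # key-value pairs


class PropertyGraph:
    def __init__(self) -> None:
        self.nodes: dict[int, Node] = {}
        self.relationships: list[Relationship] = []

    def add_node(self, node_id: int, label: str, **properties: object) -> Node:
        node = Node(node_id, label, dict(properties))
        self.nodes[node_id] = node
        return node

    def relate(self, start: int, end: int, rel_type: str, **properties: object) -> None:
        self.relationships.append(Relationship(start, end, rel_type, dict(properties)))

    def neighbors(self, node_id: int, rel_type: str) -> list[Node]:
        """Follow outgoing relationships of the given type from one node."""
        return [self.nodes[r.end] for r in self.relationships
                if r.start == node_id and r.rel_type == rel_type]


graph = PropertyGraph()
graph.add_node(1, "Person", name="Alice")
graph.add_node(2, "Person", name="Bob")
graph.add_node(3, "Person", name="Carol")
graph.relate(1, 2, "KNOWS", since=2019)
graph.relate(2, 3, "KNOWS", since=2021)

# Friends of friends of Alice: two hops along KNOWS relationships.
fof = [n.properties["name"]
       for friend in graph.neighbors(1, "KNOWS")
       for n in graph.neighbors(friend.node_id, "KNOWS")]
print(fof)  # ['Carol']
```

In Neo4j itself, the same two-hop question would be written in Cypher, roughly as `MATCH (a:Person {name: 'Alice'})-[:KNOWS]->()-[:KNOWS]->(fof) RETURN fof.name`.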

Xplenty is a cloud platform to integrate, process, and prepare data for analytics.
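The integrate, process, and prepare steps correspond to a classic extract-transform-load (ETL) flow. Xplenty is a hosted service, so the sketch below does not use it. It is a plain-Python illustration assuming two hypothetical sources (an orders CSV and a customers JSON document) and an in-memory SQLite database standing in for the analytics store.

```python
import csv
import io
import json
import sqlite3

# Hypothetical raw inputs from two different sources.
ORDERS_CSV = """order_id,customer_id,amount
1,c1,19.99
2,c2,5.00
3,c1,not-a-number
4,c3,42.50
"""
CUSTOMERS_JSON = '[{"id": "c1", "country": "US"}, {"id": "c2", "country": "DE"}]'


def extract() -> tuple[list[dict], list[dict]]:
    """Read both sources into lists of dictionaries."""
    orders = list(csv.DictReader(io.StringIO(ORDERS_CSV)))
    customers = json.loads(CUSTOMERS_JSON)
    return orders, customers


def transform(orders: list[dict], customers: list[dict]) -> list[tuple[int, str, float]]:
    """Join orders to customers, cast types, and drop rows that fail validation."""
    country_by_id = {c["id"]: c["country"] for c in customers}
    rows = []
    for order in orders:
        try:
            amount = float(order["amount"])
        except ValueError:
            continue  # skip malformed amounts
        country = country_by_id.get(order["customer_id"])
        if country is None:
            continue  # skip orders whose customer is unknown
        rows.append((int(order["order_id"]), country, amount))
    return rows


def load(rows: list[tuple[int, str, float]], conn: sqlite3.Connection) -> None:
    """Write the prepared rows into a table that analytics queries can use."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales "
        "(order_id INTEGER PRIMARY KEY, country TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(*extract()), conn)
totals = conn.execute(
    "SELECT country, SUM(amount) FROM sales GROUP BY country ORDER BY country"
).fetchall()
print(totals)
```

Orders 3 (bad amount) and 4 (unknown customer) are filtered out during the transform step, so only clean, joined rows reach the analytics table.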

Apache Hadoop is a software framework used for clustered file systems and the handling of big data. HPCC is a big data tool developed by LexisNexis Risk Solutions.

It delivers a single architecture and a single programming language (ECL) for data processing on a single platform.

Tools and technologies for big data include those for other enterprise information management activities: enterprise content management or document management, data warehousing, and business intelligence, including data visualization, data analytics, data integration, metadata management, and data virtualization.

Big Data is a term encompassing the use of techniques to capture, process, analyse, and visualize potentially large datasets in a reasonable timeframe, which standard IT technologies cannot achieve. By extension, the platforms, tools, and software used for this purpose are collectively called Big Data technologies.
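As one concrete example of such a technique, Hadoop processes data with the MapReduce model: a map phase emits key-value pairs, the framework groups them by key (the shuffle), and a reduce phase combines each group. The sketch below simulates those three phases for a word count in a single Python process. It is not Hadoop's Java API, and the function names and input splits are hypothetical; Hadoop would run many mappers and reducers in parallel across a cluster.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(document: str) -> Iterator[tuple[str, int]]:
    """Mapper: emit (word, 1) for every word in one input split."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Group intermediate values by key, as the framework does between map and reduce."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reducer: combine all values collected for one key."""
    return key, sum(values)


def word_count(splits: list[str]) -> dict[str, int]:
    intermediate = (pair for split in splits for pair in map_phase(split))
    return dict(reduce_phase(k, v) for k, v in shuffle(intermediate).items())


splits = ["Big data needs big tools", "Hadoop processes big data"]
print(word_count(splits))
```

Because each mapper only sees its own split and each reducer only sees one key's values, both phases can be distributed independently, which is what lets the model scale to very large datasets.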

Modern big data technologies and tools are mature enough for enterprise Big Data efforts, allowing organizations to process up to hundreds of petabytes of data. Still, their efficiency depends on the system architecture that uses them, whether that architecture serves an ETL workload, stream processing, or analytical dashboards for decision-making.

ER/Studio is a tool for data architecture and database design.

Data architects, modelers, DBAs, and business analysts find ER/Studio useful for creating and managing database designs and for reusing data. It was developed by Embarcadero Technologies.

Big data refers to data sets that cannot be captured, managed, and processed by conventional software tools within a reasonable period of time.

Big data therefore needs new processing modes to deliver stronger decision-making power and insight. The main process of big data processing includes data collection. A modern unified data architecture includes the infrastructure, tools, and technologies that create, manage, and support data collection, processing, analytical, and ML workloads.

Building and operating the data architecture in an organization requires deployments to the cloud and to colocation facilities, and the use of several technologies (open source and proprietary) and languages (Python, SQL, Java).

There are many great Big Data tools on the market right now. Any top-10 list has to leave out prominent solutions that still warrant a mention, such as Kafka and Kafka Streams, Apache Tez, Apache Impala, Apache Beam, and Apache Apex.

All of them, and many more, are great at what they do.

Big data architecture is the overarching system used to ingest and process enormous amounts of data (often referred to as big data) so that it can be analyzed for business purposes.

Big data architecture layers:

Big Data Sources Layer: a big data environment can manage both batch processing and real-time processing of big data.

Management & Storage Layer: receives data from the source and converts it into a format that downstream data analytics tools can understand.
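The conversion step of the Management & Storage Layer can be sketched as a dispatcher that parses each incoming format into one common record type. Everything here is a hypothetical illustration: the `Reading` schema, the two source formats (a CSV feed in Celsius and a JSON feed in Fahrenheit), and the list used as a stand-in for real storage.

```python
import csv
import io
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    """Hypothetical common schema that downstream analytics tools expect."""
    sensor_id: str
    temperature_c: float


def from_csv(payload: str) -> list[Reading]:
    reader = csv.DictReader(io.StringIO(payload))
    return [Reading(row["sensor"], float(row["temp_c"])) for row in reader]


def from_json(payload: str) -> list[Reading]:
    # This hypothetical source reports Fahrenheit, so normalize units on ingest.
    return [Reading(item["id"], round((item["temp_f"] - 32) * 5 / 9, 2))
            for item in json.loads(payload)]


PARSERS = {"csv": from_csv, "json": from_json}


def ingest(fmt: str, payload: str) -> list[Reading]:
    """Dispatch on the source format and return records in the common schema."""
    try:
        parser = PARSERS[fmt]
    except KeyError:
        raise ValueError(f"unsupported format: {fmt}") from None
    return parser(payload)


store: list[Reading] = []  # stand-in for the storage layer
store += ingest("csv", "sensor,temp_c\ns1,21.5\ns2,19.0\n")
store += ingest("json", '[{"id": "s3", "temp_f": 212}]')
print(store)
```

Keeping one parser per source behind a single `ingest` entry point means adding a new source only requires registering another parser, while everything downstream keeps working with the same `Reading` records.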